[Abstract]

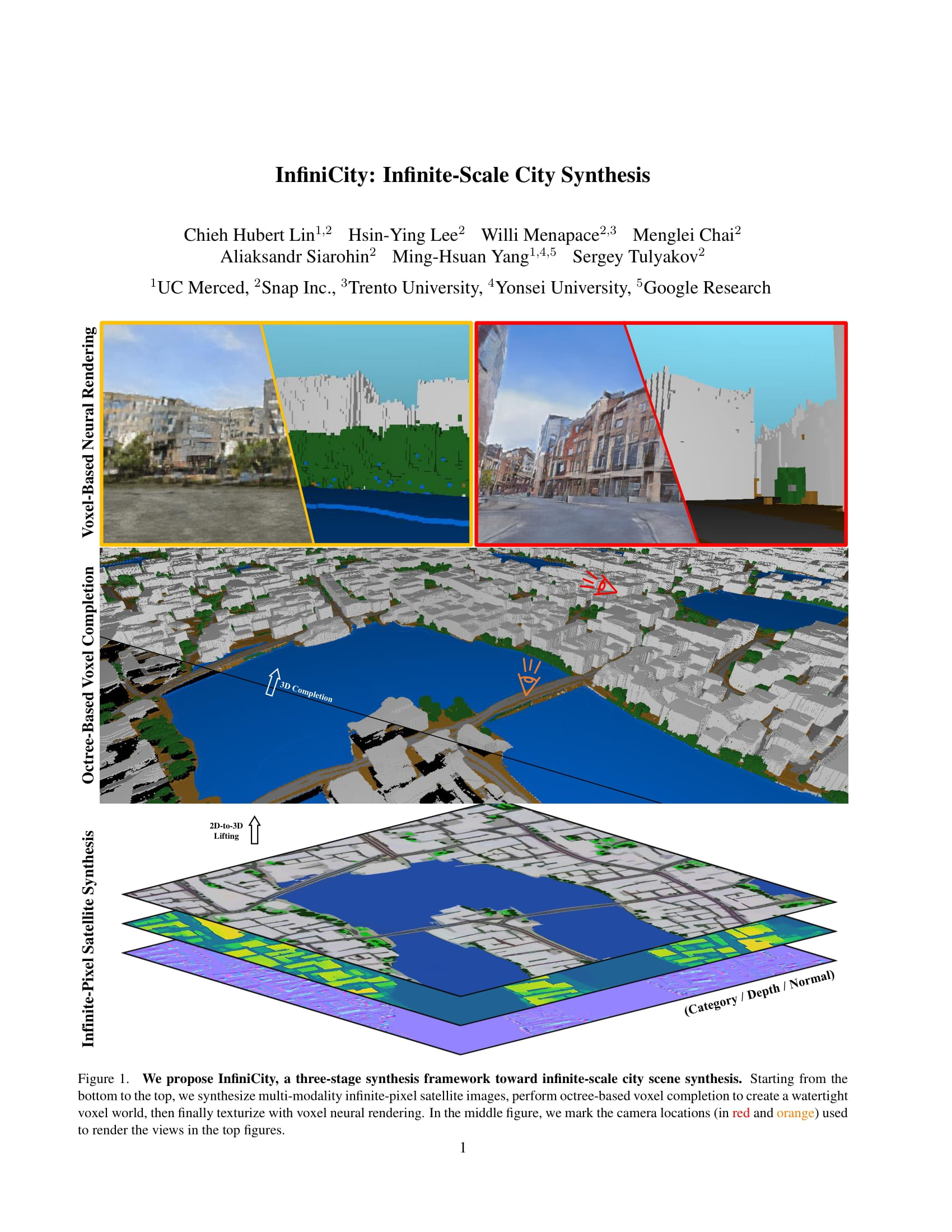

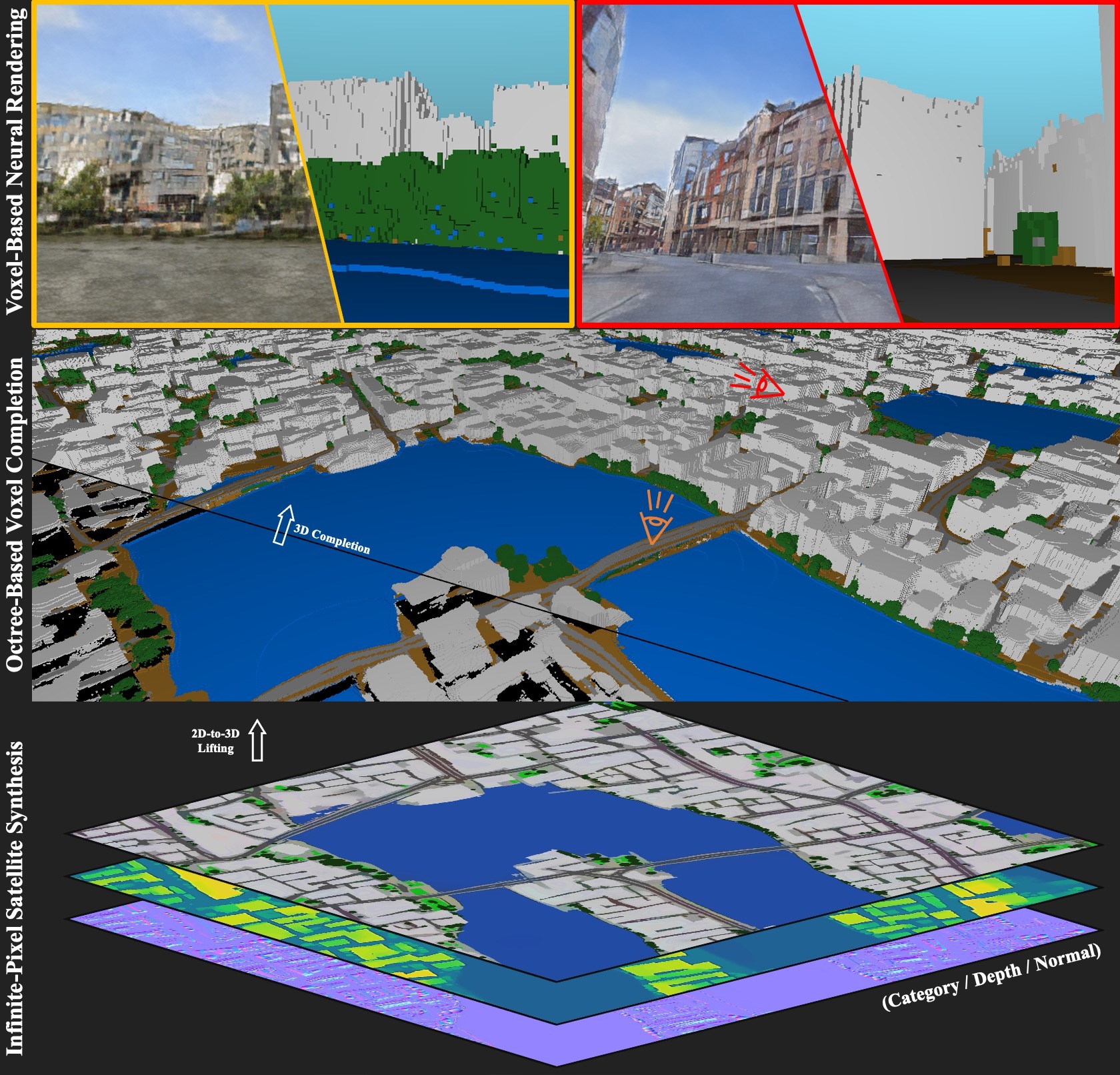

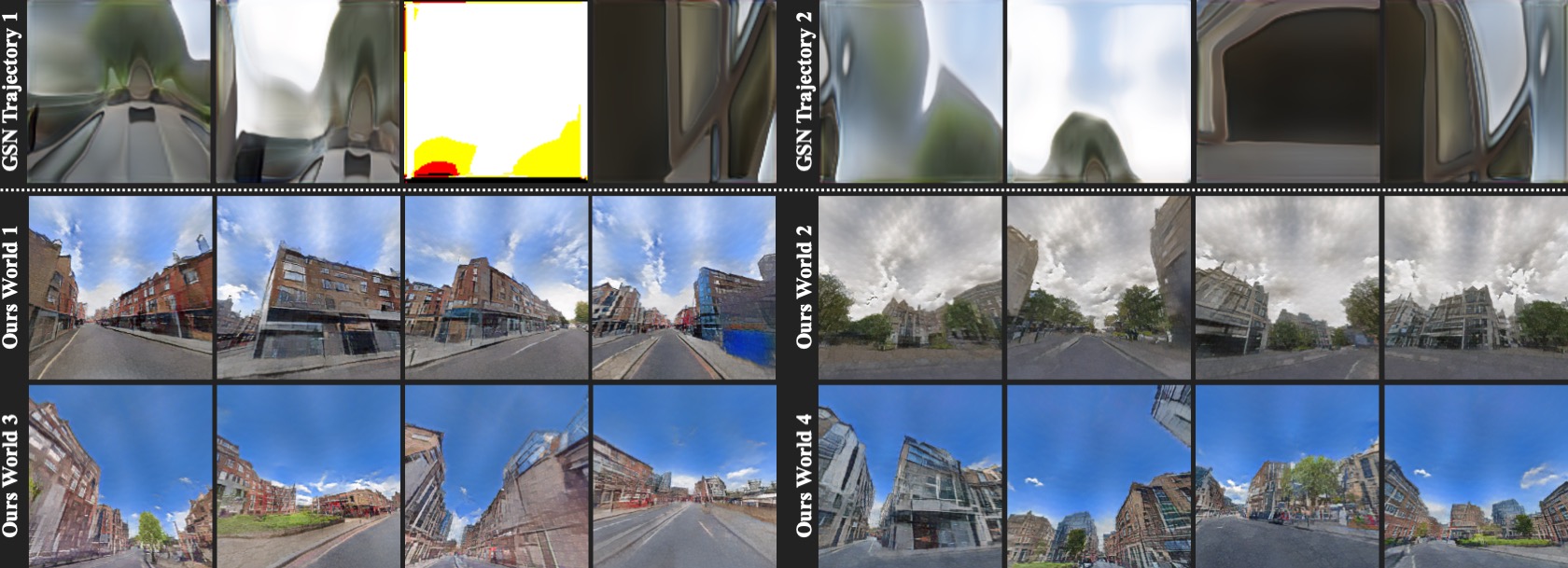

Toward infinite-scale 3D city synthesis, we propose a novel framework, InfiniCity, which constructs and renders an arbitrarily large, 3D-grounded environment from random noise. InfiniCity decomposes this seemingly impractical task into three feasible modules that take advantage of both 2D and 3D data. First, an infinite-pixel image synthesis module generates arbitrary-scale 2D maps from the bird's-eye view. Next, an octree-based voxel completion module lifts the generated 2D map to 3D octrees. Finally, a voxel-based neural rendering module texturizes the voxels and renders 2D images. InfiniCity can thus synthesize arbitrary-scale, traversable 3D city environments and allows flexible, interactive editing by users. We quantitatively and qualitatively demonstrate the efficacy of the proposed framework.

[Citation]

@inproceedings{lin2023infinicity,
  title={Infini{C}ity: Infinite-Scale City Synthesis},
  author={Lin, Chieh Hubert and Lee, Hsin-Ying and Menapace, Willi and Chai, Menglei and Siarohin, Aliaksandr and Yang, Ming-Hsuan and Tulyakov, Sergey},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  year={2023},
}

Framework Overview

From bottom to top, we synthesize an infinite-pixel satellite map, perform octree-based voxel completion to create a watertight voxel world, then finally texturize it with voxel-based neural rendering. In the middle figure, we mark the camera locations (in red and orange) used to render the views in the top figures.

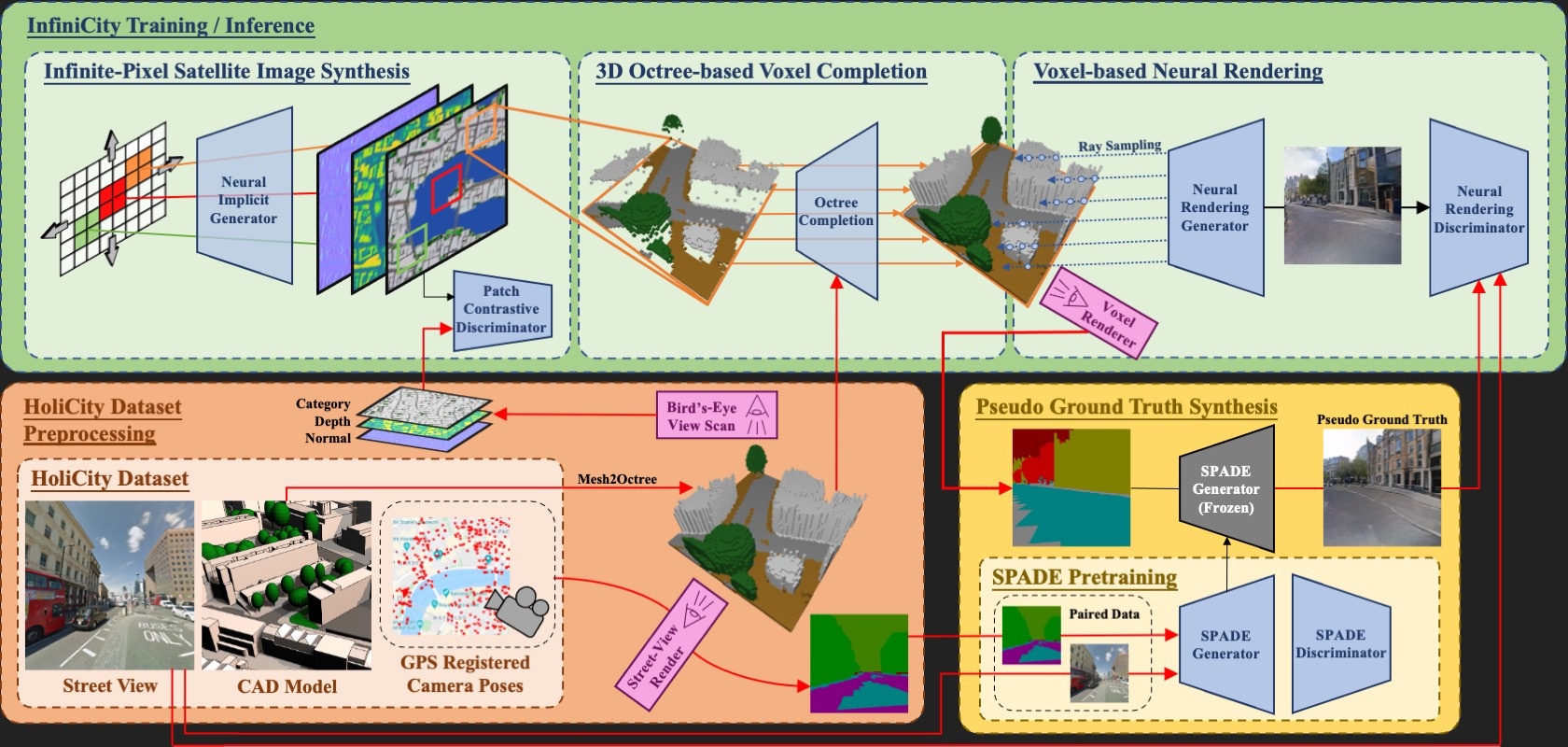

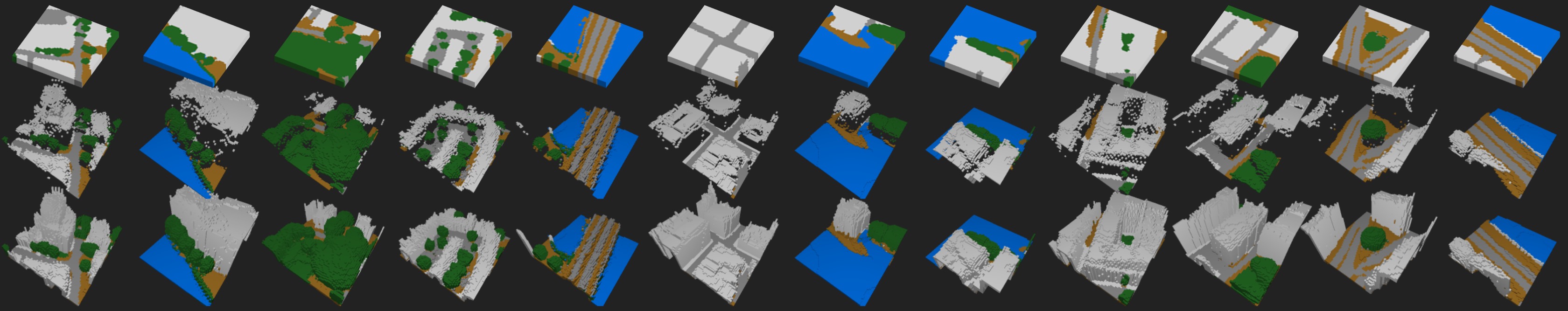

Methodology

InfiniCity consists of three major modules. The ▶ Infinite-pixel satellite image synthesis stage is trained on image tuples (category, depth, and normal maps) derived from a bird's-eye view scan of the 3D environment, and is able to synthesize arbitrary-scale satellite maps during inference. The ▶ 3D octree-based voxel completion stage is trained on pairs of surface-scanned and completed octrees. During inference, it takes the surface voxels lifted from the satellite images as inputs and produces the watertight voxel world. Finally, the ▶ voxel-based neural rendering stage performs ray-sampling to retrieve features from the voxel world, then renders the final image with a neural renderer. The neural renderer is trained with both real images and pseudo-ground-truths synthesized by a pretrained SPADE generator. With these modules, InfiniCity can synthesize an arbitrary-scale and traversable 3D city environment from noise.
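To make the hand-off between the first two stages concrete, here is a minimal sketch of lifting bird's-eye-view maps to surface voxels. It is an illustration under assumptions, not the authors' implementation: the function name `lift_to_surface_voxels`, the dense NumPy grid (the paper uses octrees), and the input encoding (a per-pixel height in voxel units, which could be derived from the depth map, plus an integer category map with 0 meaning empty) are all hypothetical.

```python
import numpy as np


def lift_to_surface_voxels(height_map, category_map, max_height):
    """Lift 2D bird's-eye-view maps to a dense grid of surface voxels.

    height_map:   (H, W) integers, top-surface height per pixel in voxel
                  units (assumed encoding, not taken from the paper).
    category_map: (H, W) integers, semantic label per pixel; 0 means empty.
    Returns an (H, W, max_height) array that stores the label at each
    surface voxel and 0 everywhere else.
    """
    height_map = np.asarray(height_map)
    category_map = np.asarray(category_map)
    if height_map.shape != category_map.shape:
        raise ValueError("height_map and category_map must have the same shape")

    # Clamp heights so every pixel maps to a valid z index.
    z = np.clip(height_map, 0, max_height - 1).astype(np.int64)

    voxels = np.zeros(height_map.shape + (max_height,), dtype=category_map.dtype)
    rows, cols = np.indices(height_map.shape)
    # One voxel per map pixel: only the visible top surface is filled.
    voxels[rows, cols, z] = category_map
    return voxels


# Toy 2x2 map: four pixels with different heights and labels.
heights = np.array([[0, 2], [1, 3]])
labels = np.array([[1, 2], [3, 4]])
voxels = lift_to_surface_voxels(heights, labels, max_height=4)
occupied = int(np.count_nonzero(voxels))
```

Each pixel contributes exactly one voxel, so the columns below the surface stay empty. Turning that hollow shell into a watertight world is the job the text assigns to the learned voxel completion stage.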