
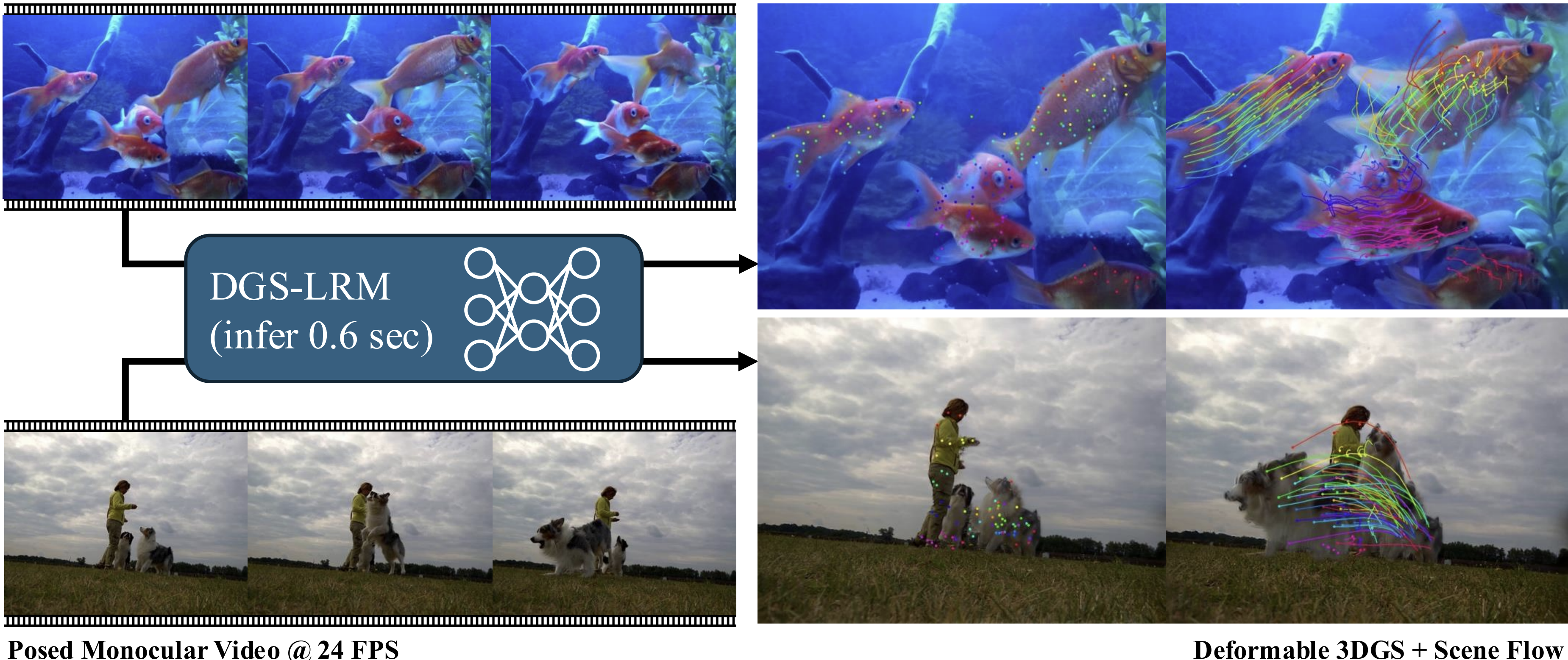

DGS-LRM: Real-Time Deformable 3D Gaussian Reconstruction From Monocular Videos

Chieh Hubert Lin, Zhaoyang Lv, Songyin Wu, Zhen Xu, Thu Nguyen-Phuoc, Hung-Yu Tseng, Julian Straub, Numair Khan, Lei Xiao, Ming-Hsuan Yang, Yuheng Ren, Richard Newcombe, Zhao Dong, Zhengqin Li

NeurIPS 2025

[abs]

[paper]

[project page]

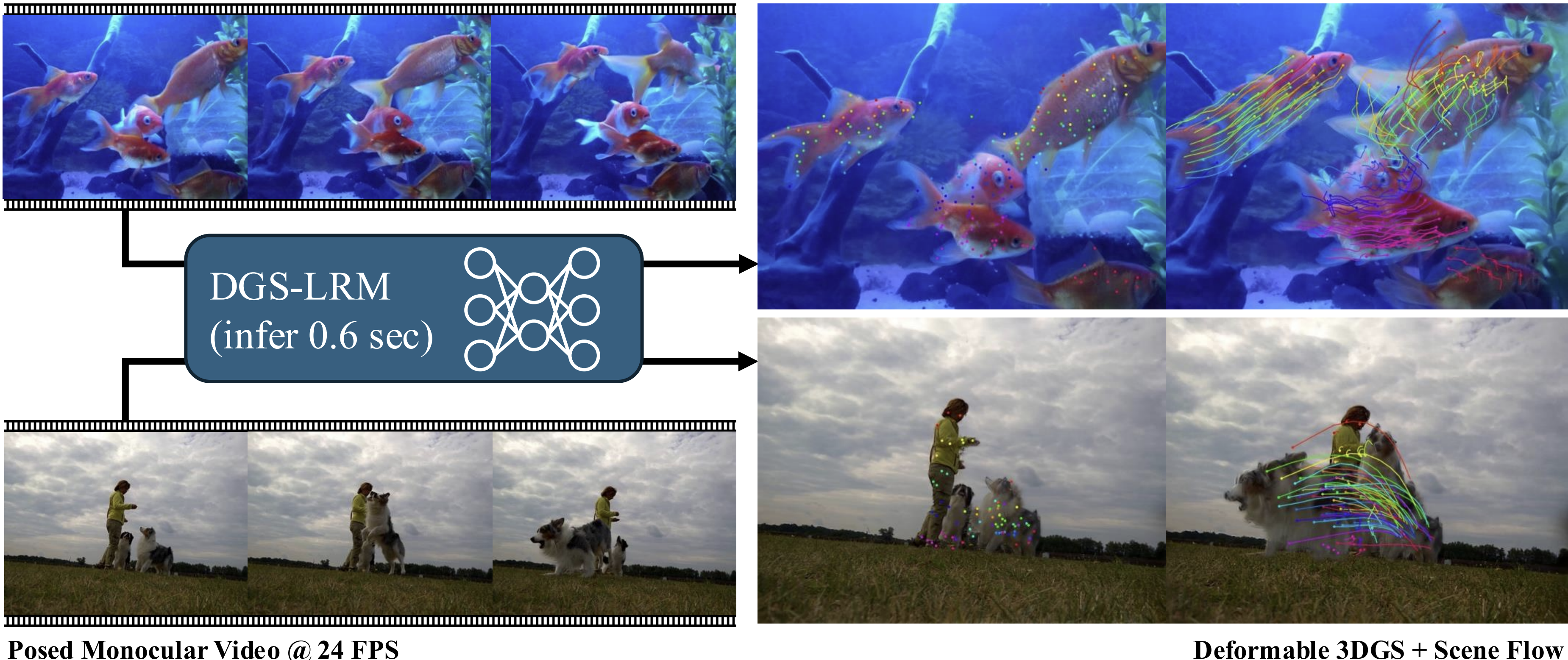

We introduce the Deformable Gaussian Splats Large Reconstruction Model (DGS-LRM), the first feed-forward method that predicts deformable 3D Gaussian splats from a monocular posed video of any dynamic scene. Feed-forward scene reconstruction has gained significant attention for its ability to rapidly create digital replicas of real-world environments. However, most existing models are limited to static scenes and fail to reconstruct the motion of moving objects. Developing a feed-forward model for dynamic scene reconstruction poses significant challenges, including the scarcity of training data and the need for appropriate 3D representations and training paradigms. To address these challenges, we introduce several key technical contributions: an enhanced large-scale synthetic dataset with ground-truth multi-view videos and dense 3D scene flow supervision; a per-pixel deformable 3D Gaussian representation that is easy to learn, supports high-quality dynamic view synthesis, and enables long-range 3D tracking; and a large transformer network that achieves real-time, generalizable dynamic scene reconstruction. Extensive qualitative and quantitative experiments demonstrate that DGS-LRM achieves dynamic scene reconstruction quality comparable to optimization-based methods, while significantly outperforming the state-of-the-art predictive dynamic reconstruction method on real-world examples. Its predicted, physically grounded 3D deformation is accurate and can be readily adapted to long-range 3D tracking tasks, achieving performance on par with state-of-the-art monocular video 3D tracking methods.
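The per-pixel representation suggests how long-range 3D tracking can fall out of the prediction: if every pixel owns one Gaussian whose centre moves over time, following that Gaussian yields a 3D trajectory. Below is a minimal NumPy sketch under hypothetical assumptions: the network is taken to output, for each pixel, a depth along its camera ray plus one 3D offset per timestep. The function names, array layouts, and pinhole camera model are illustrative choices, not DGS-LRM's actual interface.

```python
import numpy as np

def unproject_pixels(depth, K_inv, cam_to_world):
    """Lift an (H, W) depth map to one 3D Gaussian centre per pixel (world frame).

    Hypothetical layout: depth is z-depth along each pixel's camera ray,
    K_inv is the inverse 3x3 pinhole intrinsics, cam_to_world a 4x4 pose.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w) + 0.5, np.arange(h) + 0.5)  # pixel centres
    pix = np.stack([u, v, np.ones_like(u)], axis=-1)           # (H, W, 3) homogeneous
    rays_cam = pix @ K_inv.T                                    # camera-frame rays, z = 1
    pts_cam = rays_cam * depth[..., None]                       # scale each ray by its depth
    R, t = cam_to_world[:3, :3], cam_to_world[:3, 3]
    return pts_cam @ R.T + t                                    # (H, W, 3) world points

def track(centres, offsets):
    """Trajectories of per-pixel Gaussians.

    centres: (H, W, 3) canonical centres; offsets: (T, H, W, 3) hypothetical
    per-timestep displacements. Returns (T, H, W, 3) positions over time.
    """
    return centres[None] + offsets
```

Reading `track(...)[:, i, j]` then gives the 3D path of whatever surface point pixel `(i, j)` observed, which is the sense in which a per-pixel deformable representation doubles as a tracker.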


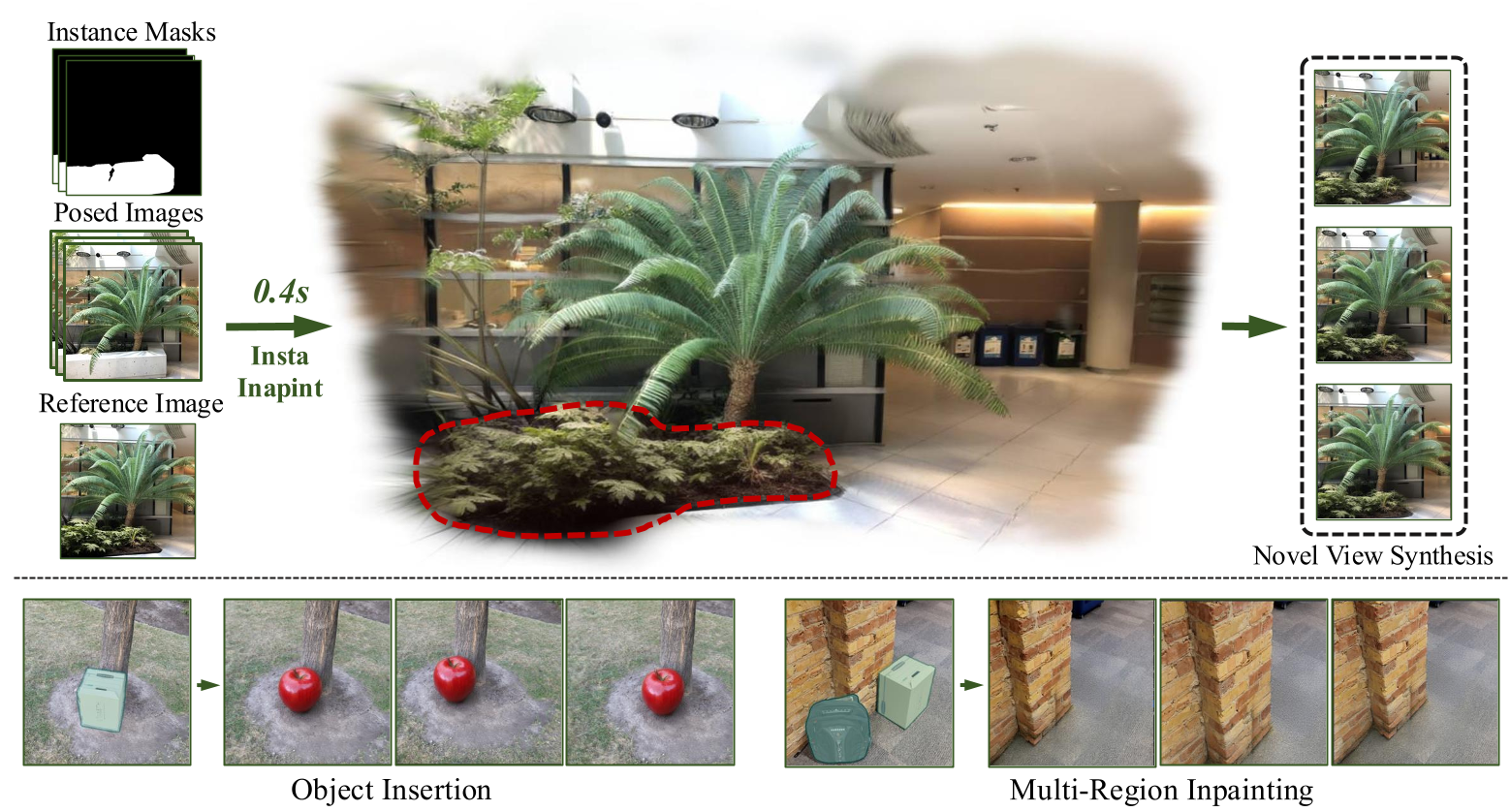

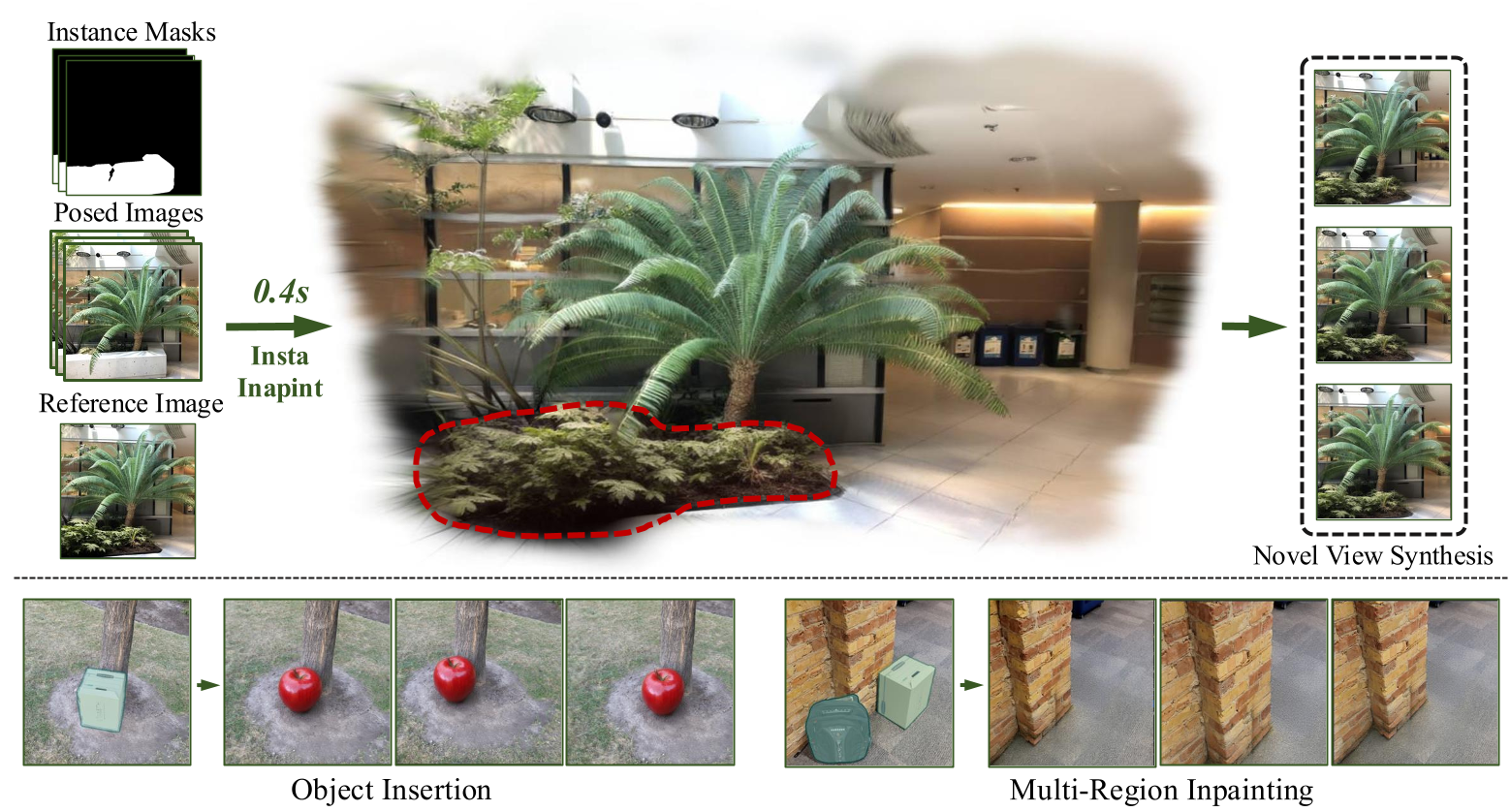

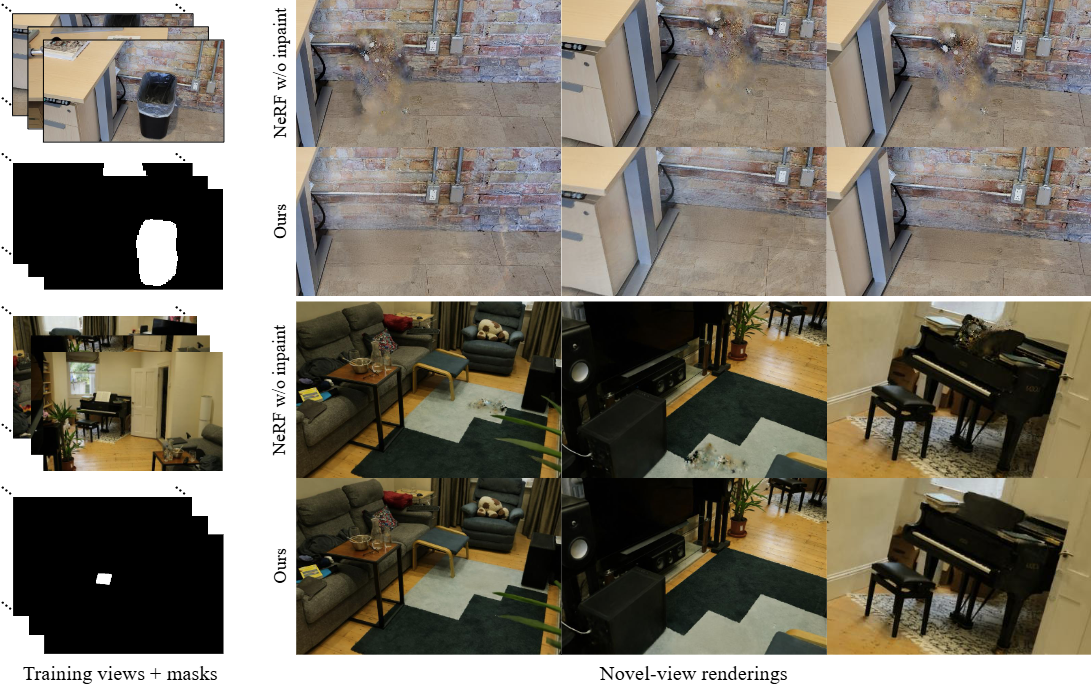

InstaInpaint: Instant 3D-Scene Inpainting with Masked Large Reconstruction Model

Junqi You, Chieh Hubert Lin, Weijie Lyu, Zhengbo Zhang, Ming-Hsuan Yang

NeurIPS 2025

[abs]

[paper]

[project page]

Recent advances in 3D scene reconstruction enable real-time viewing in virtual and augmented reality. To support interactive operations for better immersiveness, such as moving or editing objects, 3D scene inpainting methods have been proposed to repair or complete the altered geometry. However, current approaches rely on lengthy and computationally intensive optimization, making them impractical for real-time or online applications. We propose InstaInpaint, a reference-based feed-forward framework that produces a 3D-scene inpainting from a 2D inpainting proposal within 0.4 seconds. We develop a self-supervised masked-finetuning strategy to enable training of our custom large reconstruction model (LRM) on a large-scale dataset. Through extensive experiments, we analyze and identify several key designs that improve generalization, textural consistency, and geometric correctness. InstaInpaint achieves a 1000x speed-up over prior methods while maintaining state-of-the-art performance across two standard benchmarks. Moreover, we show that InstaInpaint generalizes well to flexible downstream applications such as object insertion and multi-region inpainting.


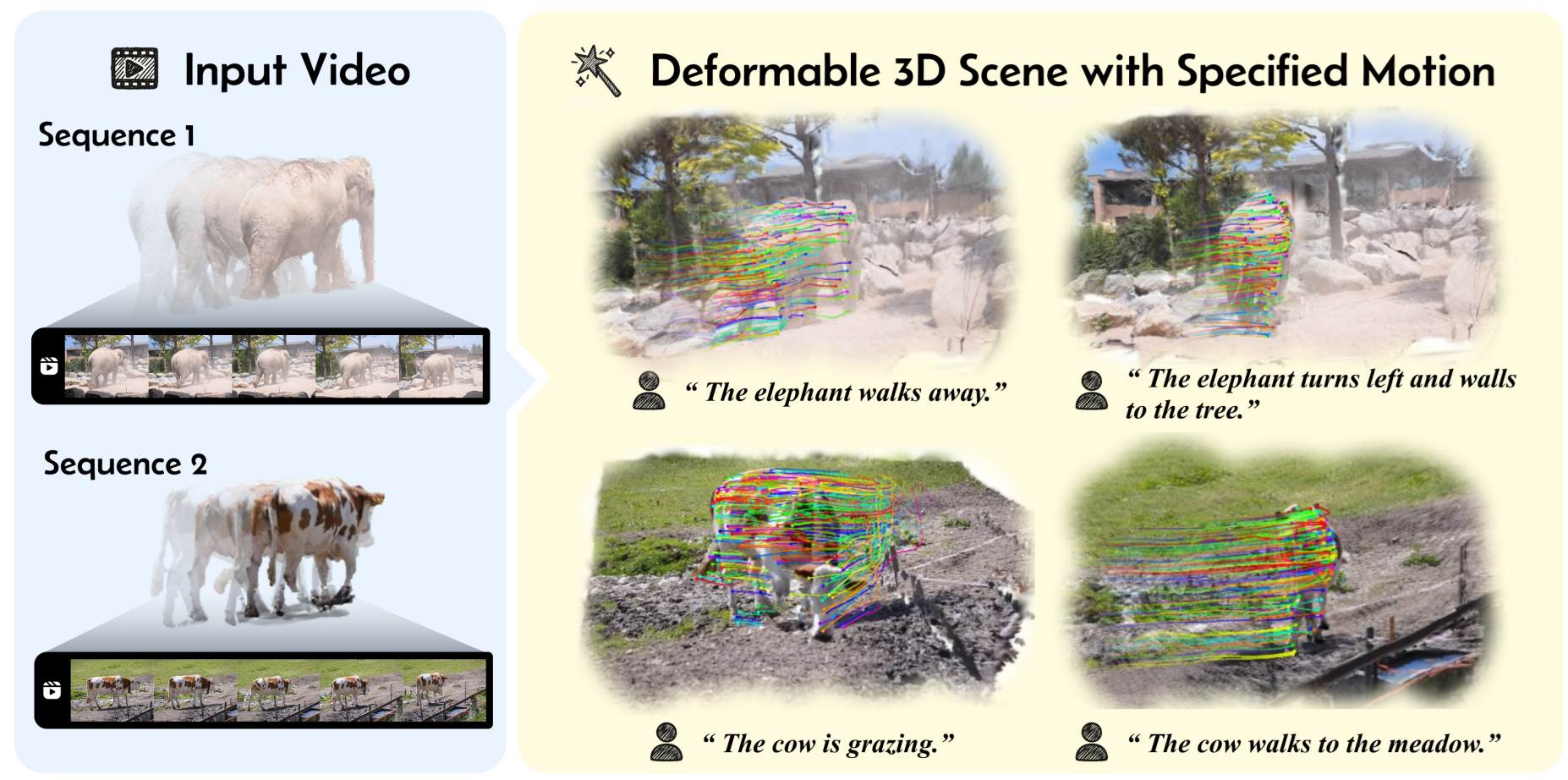

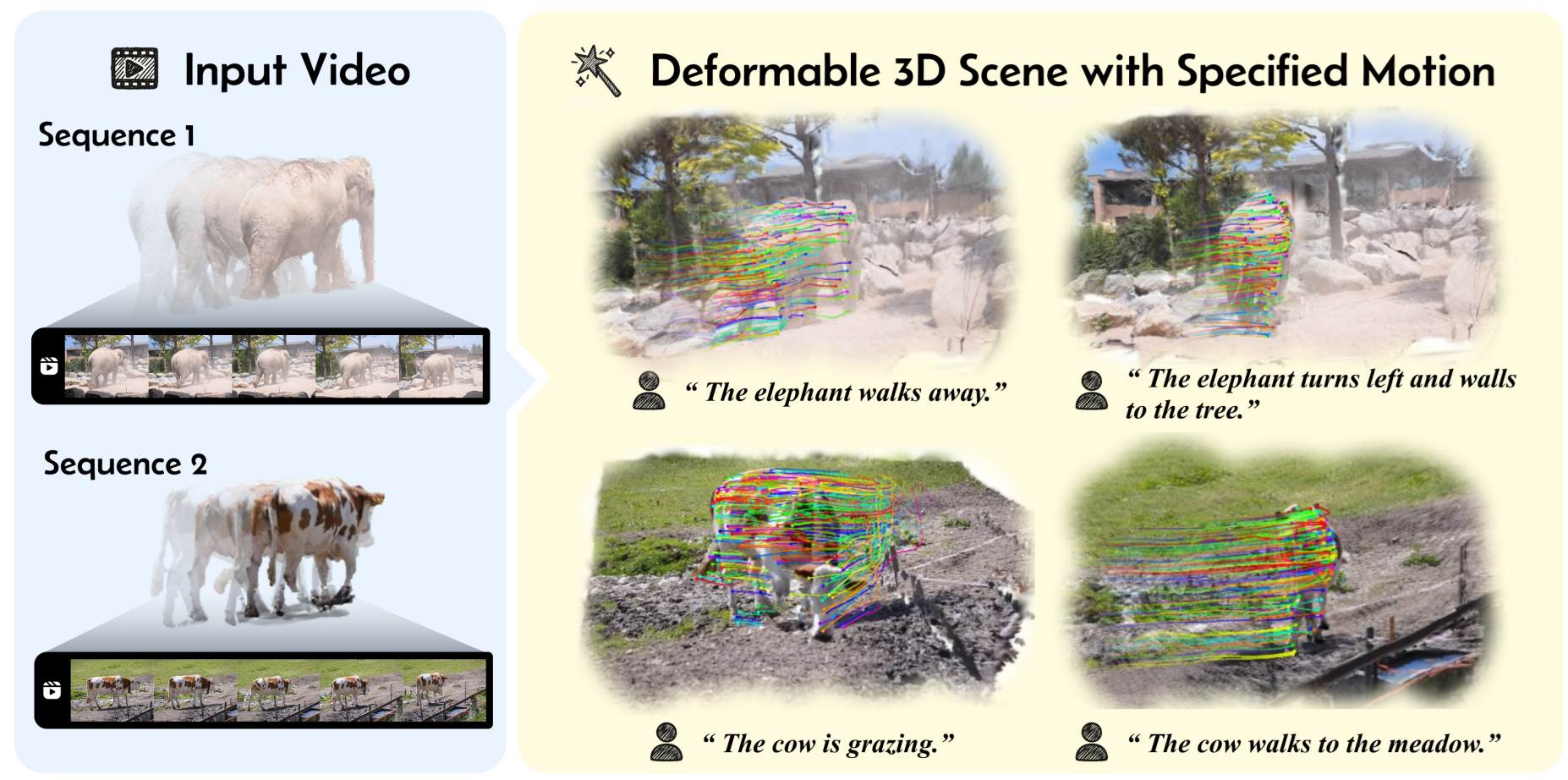

Restage4D: Reanimating Deformable 3D Reconstruction from a Single Video

Jixuan He, Chieh Hubert Lin, Lu Qi, Ming-Hsuan Yang

NeurIPS 2025

[abs]

[paper]

Creating deformable 3D content has gained increasing attention with the rise of text-to-image and image-to-video generative models. While these models provide rich semantic priors for appearance, they struggle to capture the physical realism and motion dynamics needed for authentic 4D scene synthesis. In contrast, real-world videos can provide physically grounded geometry and articulation cues that are difficult to hallucinate. This raises a question: *Can we generate physically consistent 4D content by leveraging the motion priors of a real-world video?* In this work, we explore the task of reanimating deformable 3D scenes from a single video, using the original sequence as a supervisory signal to correct artifacts from synthetic motion. We introduce **Restage4D**, a geometry-preserving pipeline for video-conditioned 4D restaging. Our approach uses a video-rewinding training strategy to temporally bridge a real base video and a synthetic driving video via a shared motion representation. We further incorporate an occlusion-aware rigidity loss and a disocclusion backtracing mechanism to improve structural and geometric consistency under challenging motion. We validate Restage4D on DAVIS and PointOdyssey, demonstrating improved geometric consistency, motion quality, and 3D tracking performance. Our method not only preserves deformable structure under novel motion, but also automatically corrects errors introduced by generative models, revealing the potential of video priors in the 4D restaging task.


Skyfall-GS: Synthesizing Immersive 3D Urban Scenes from Satellite Imagery

Jie-Ying Lee, Yi-Ruei Liu, Shr-Ruei Tsai, Wei-Cheng Chang, Chung-Ho Wu, Jiewen Chan, Zhenjun Zhao, Chieh Hubert Lin, Yu-Lun Liu

ArXiv 2025

[abs]

[paper]

[project page]

Synthesizing large-scale, explorable, and geometrically accurate 3D urban scenes is a challenging yet valuable task for immersive and embodied applications. The main challenge lies in the lack of large-scale, high-quality real-world 3D scans for training generalizable generative models. In this paper, we take an alternative route to creating large-scale 3D scenes by combining readily available satellite imagery, which supplies realistic coarse geometry, with an open-domain diffusion model that creates high-quality close-up appearances. We propose **Skyfall-GS**, the first city-block-scale 3D scene creation framework that requires no costly 3D annotations, also featuring real-time, immersive 3D exploration. We tailor a curriculum-driven iterative refinement strategy to progressively enhance geometric completeness and photorealistic textures. Extensive experiments demonstrate that Skyfall-GS provides improved cross-view consistent geometry and more realistic textures compared to state-of-the-art approaches.


Towards Affordance-Aware Articulation Synthesis for Rigged Objects

Yu-Chu Yu, Chieh Hubert Lin, Hsin-Ying Lee, Chaoyang Wang, Yu-Chiang Frank Wang, Ming-Hsuan Yang

ArXiv

[abs]

[paper]

[project page]

Rigged objects are commonly used in artist pipelines, as they can flexibly adapt to different scenes and postures. However, articulating the rigs into realistic affordance-aware postures (e.g., following the context and respecting the physics and the personality of the object) remains time-consuming and relies heavily on labor from experienced artists. In this paper, we tackle this novel problem and design A3Syn. Given a context, such as an environment mesh and a text prompt describing the desired posture, A3Syn synthesizes articulation parameters for arbitrary, open-domain rigged objects obtained from the Internet. The task is extremely challenging due to the lack of training data, and we make no topological assumptions about the open-domain rigs. We propose using a 2D inpainting diffusion model and several control techniques to synthesize in-context affordance information. Then, we develop an efficient bone correspondence alignment using a combination of differentiable rendering and semantic correspondence. A3Syn converges stably, completes in minutes, and synthesizes plausible affordances on different combinations of in-the-wild object rigs and scenes.


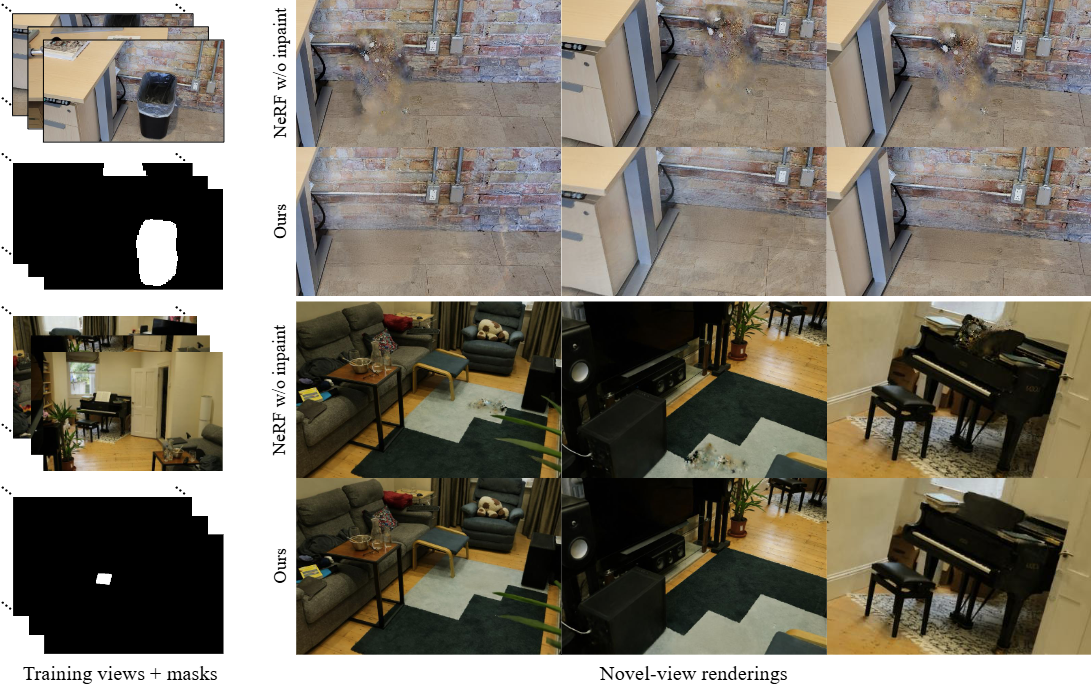

Taming Latent Diffusion Model for Neural Radiance Field Inpainting

Chieh Hubert Lin, Changil Kim, Jia-Bin Huang, Qinbo Li, Chih-Yao Ma, Johannes Kopf, Ming-Hsuan Yang, Hung-Yu Tseng

ECCV 2024

[abs]

[paper]

[project page]

Neural Radiance Field (NeRF) is a representation for 3D reconstruction from multi-view images. Although some recent work has shown preliminary success in editing a reconstructed NeRF with a diffusion prior, these methods still struggle to synthesize reasonable geometry in completely uncovered regions. One major reason is the high diversity of the content synthesized by the diffusion model, which hinders the radiance field from converging to a crisp and deterministic geometry. Moreover, applying latent diffusion models to real data often yields a textural shift that is incoherent with the image condition due to auto-encoding errors. These two problems are further reinforced by the use of pixel-distance losses. To address these issues, we propose tempering the diffusion model's stochasticity with per-scene customization and mitigating the textural shift with masked adversarial training. In our analyses, we also found that the commonly used pixel and perceptual losses are harmful in the NeRF inpainting task. Through rigorous experiments, our framework yields state-of-the-art NeRF inpainting results on various real-world scenes.


Virtual Pets: Animatable Animal Generation in 3D Scenes

Yen-Chi Cheng, Chieh Hubert Lin, Chaoyang Wang, Yash Kant, Sergey Tulyakov, Alexander Schwing, Liangyan Gui, Hsin-Ying Lee

ArXiv

[abs]

[paper]

[project page]

Toward unlocking the potential of generative models in immersive 4D experiences, we introduce Virtual Pet, a novel pipeline to model realistic and diverse motions for target animal species within a 3D environment. To circumvent the limited availability of 3D motion data aligned with environmental geometry, we leverage monocular internet videos and extract deformable NeRF representations for the foreground and static NeRF representations for the background. For this, we develop a reconstruction strategy, encompassing species-level shared template learning and per-video fine-tuning. Utilizing the reconstructed data, we then train a conditional 3D motion model to learn the trajectory and articulation of foreground animals in the context of 3D backgrounds. We showcase the efficacy of our pipeline with comprehensive qualitative and quantitative evaluations using cat videos. We also demonstrate versatility across unseen cats and indoor environments, producing temporally coherent 4D outputs for enriched virtual experiences.


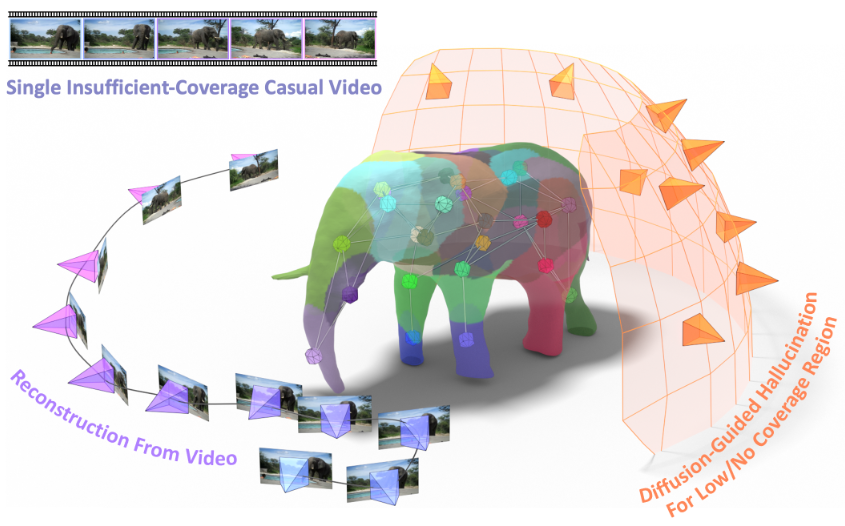

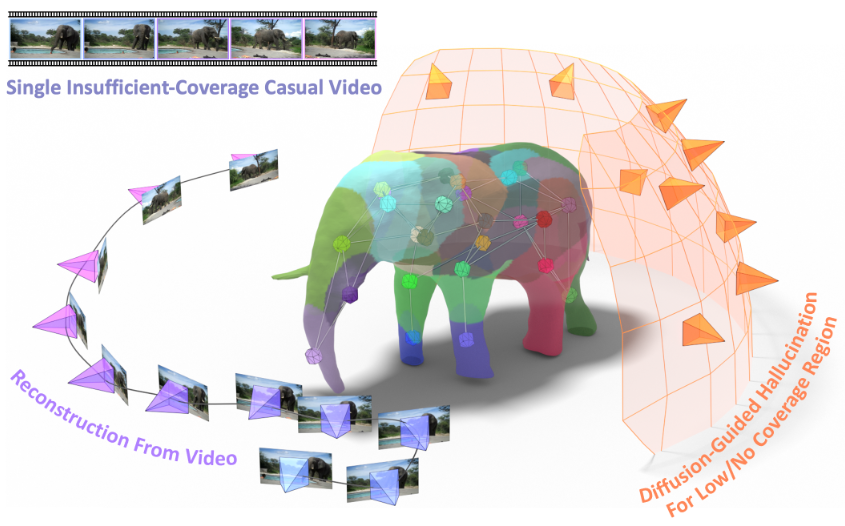

DreaMo: Articulated 3D Reconstruction From A Single Casual Video

Tao Tu, Ming-Feng Li, Chieh Hubert Lin, Yen-Chi Cheng, Min Sun, Ming-Hsuan Yang

WACV 2024

[abs]

[paper]

[project page]

Articulated 3D reconstruction has valuable applications in various domains, yet it remains costly and demands intensive work from domain experts. Recent advancements in template-free learning methods show promising results with monocular videos. Nevertheless, these approaches require comprehensive coverage of all viewpoints of the subject in the input video, thus limiting their applicability to casually captured videos from online sources. In this work, we study articulated 3D shape reconstruction from a single, casually captured internet video, where the subject's view coverage is incomplete. We propose DreaMo, which jointly performs shape reconstruction and resolves the challenging low-coverage regions with a view-conditioned diffusion prior and several tailored regularizations. In addition, we introduce a skeleton generation strategy to create human-interpretable skeletons from the learned neural bones and skinning weights. We conduct our study on a self-collected internet video collection characterized by incomplete view coverage. DreaMo shows promising quality in novel-view rendering, detailed articulated shape reconstruction, and skeleton generation. Extensive qualitative and quantitative studies validate the efficacy of each proposed component and show that existing methods are unable to recover correct geometry due to the incomplete view coverage.


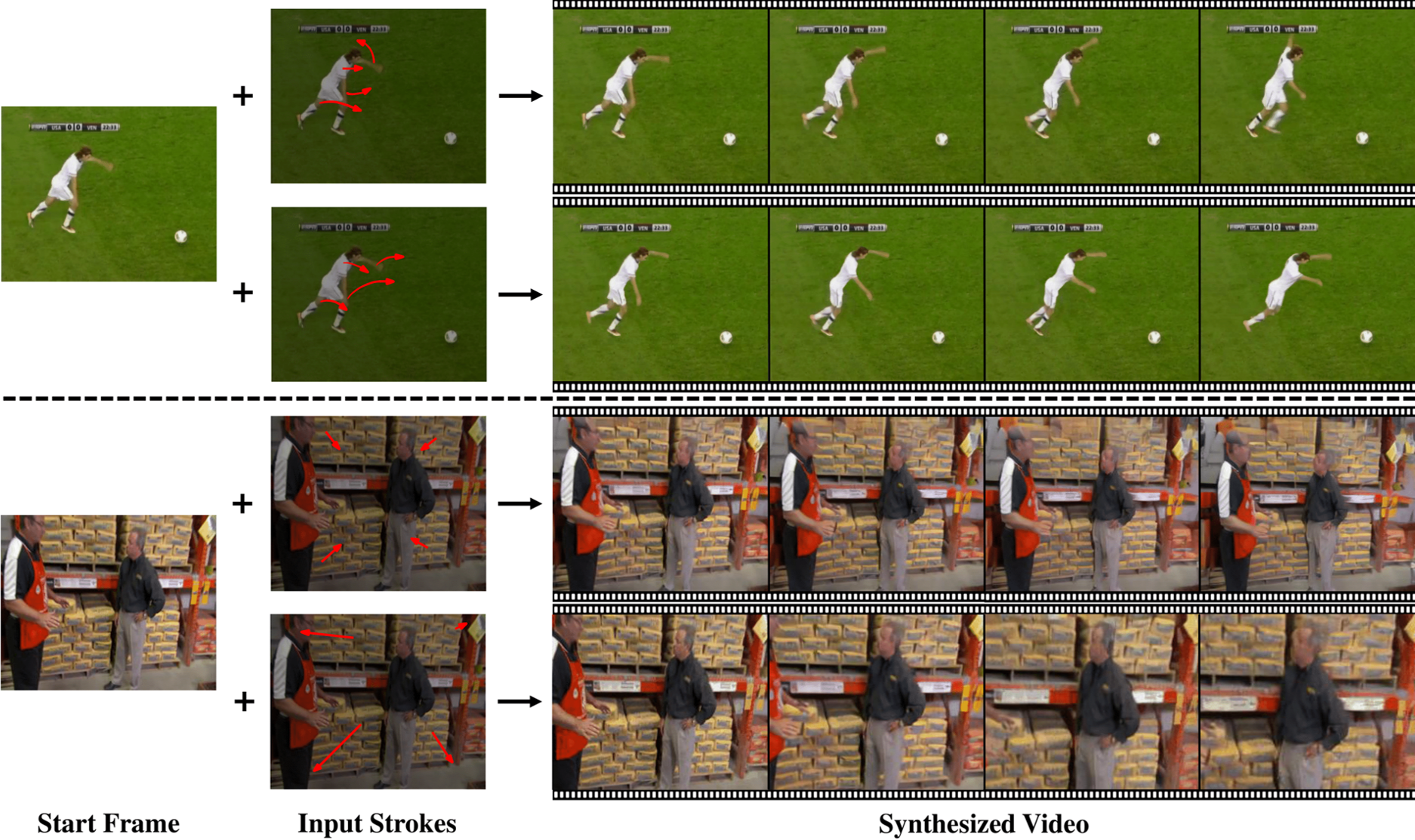

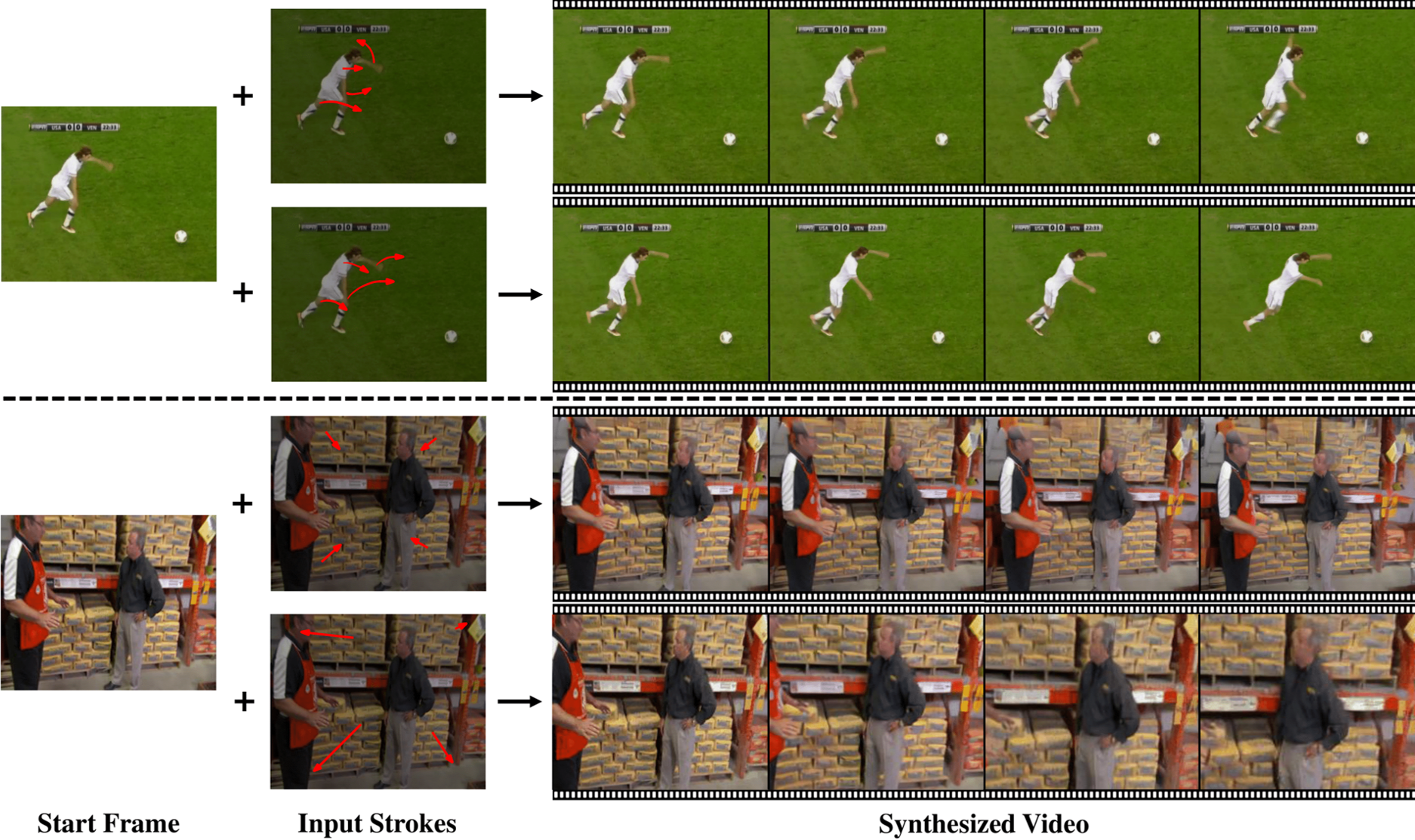

Motion-Conditioned Diffusion Model for Controllable Video Synthesis

Tsai-Shien Chen, Chieh Hubert Lin, Hung-Yu Tseng, Tsung-Yi Lin, Ming-Hsuan Yang

ArXiv

[abs]

[paper]

[project page]

Recent advancements in diffusion models have greatly improved the quality and diversity of synthesized content. To harness the expressive power of diffusion models, researchers have explored various controllable mechanisms that allow users to intuitively guide the content synthesis process. Although the latest efforts have primarily focused on video synthesis, there has been a lack of effective methods for controlling and describing the desired content and motion. In response to this gap, we introduce MCDiff, a conditional diffusion model that generates a video from a starting image frame and a set of strokes, which allow users to specify the intended content and dynamics for synthesis. To tackle the ambiguity of sparse motion inputs and achieve better synthesis quality, MCDiff first uses a flow completion model to predict the dense video motion based on the semantic understanding of the video frame and the sparse motion control. Then, the diffusion model synthesizes high-quality future frames to form the output video. We qualitatively and quantitatively show that MCDiff achieves state-of-the-art visual quality in stroke-guided controllable video synthesis. Additional experiments on MPII Human Pose further demonstrate the capability of our model for diverse content and motion synthesis.
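MCDiff's first stage turns sparse strokes into a dense motion field. The paper's flow completion model is learned and also uses the frame's semantics; as a much simpler, hand-crafted stand-in (not MCDiff's method), the sketch below spreads sparse stroke motion over the image by inverse-distance weighting. The function name `densify_flow` and its parameters are hypothetical.

```python
import numpy as np

def densify_flow(points, vectors, height, width, power=2.0, eps=1e-8):
    """Inverse-distance-weighted interpolation of sparse motion vectors.

    points:  (N, 2) array of (x, y) pixel positions sampled along user strokes
    vectors: (N, 2) array of (dx, dy) motion at those positions
    returns: (height, width, 2) dense flow field
    """
    ys, xs = np.mgrid[0:height, 0:width]
    grid = np.stack([xs, ys], axis=-1).astype(float)       # (H, W, 2) pixel (x, y)
    diff = grid[:, :, None, :] - points[None, None, :, :]  # (H, W, N, 2)
    dist = np.linalg.norm(diff, axis=-1)                   # (H, W, N)
    weights = 1.0 / (dist ** power + eps)                  # nearer stroke points dominate
    weights /= weights.sum(axis=-1, keepdims=True)         # normalise per pixel
    return np.einsum("hwn,nc->hwc", weights, vectors)      # weighted average of motions
```

Pixels on a stroke inherit its motion and pixels between strokes get a blend. That blending is exactly the ambiguity a semantics-aware completion model aims to resolve better, for instance by moving a whole object coherently rather than a blurry neighborhood around the stroke.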


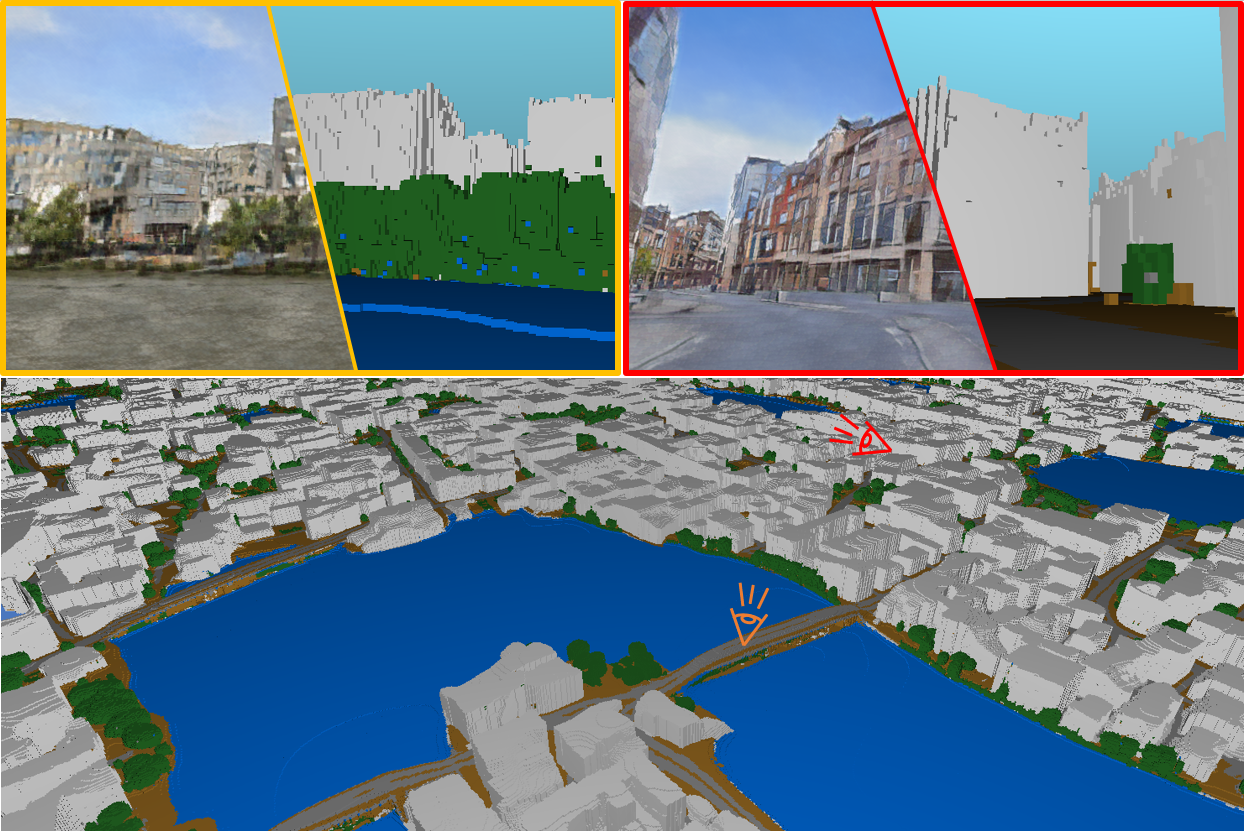

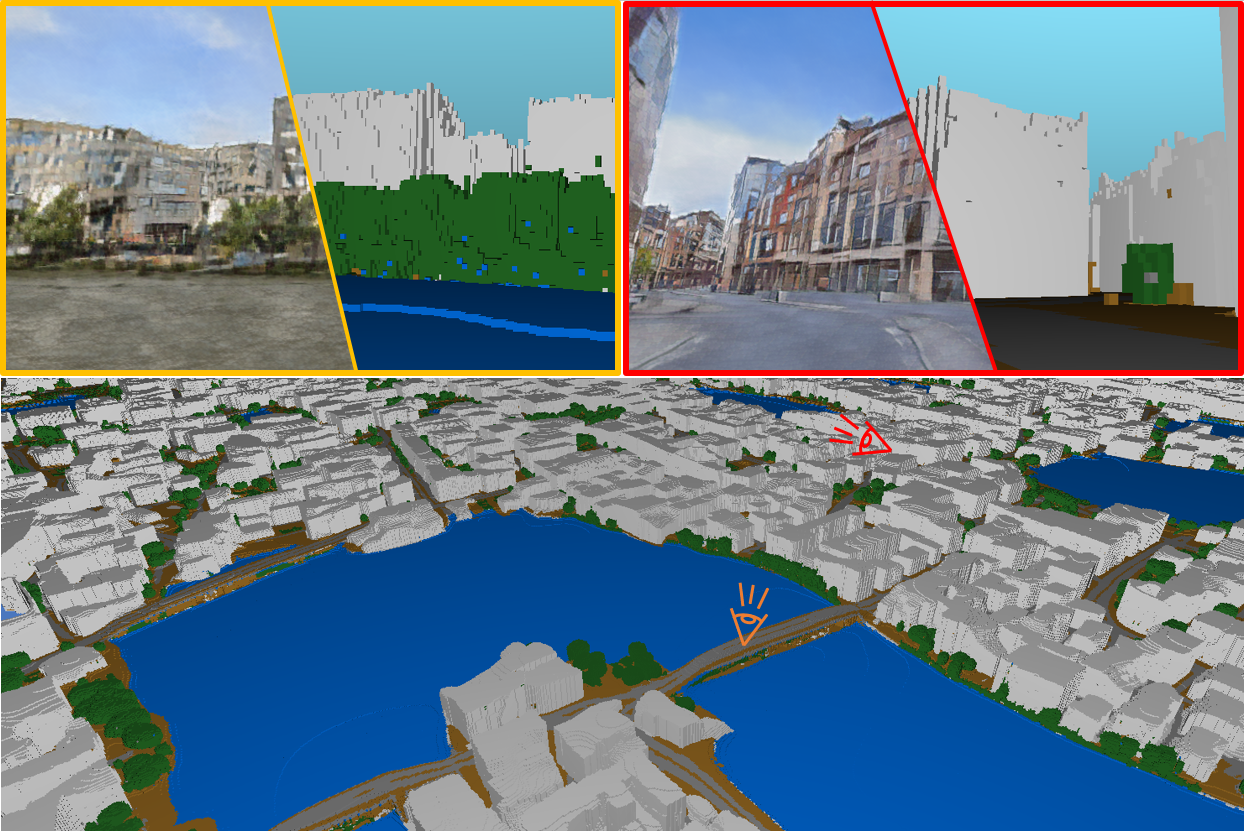

InfiniCity: Infinite-Scale City Synthesis

Chieh Hubert Lin, Hsin-Ying Lee, Willi Menapace, Menglei Chai, Aliaksandr Siarohin, Ming-Hsuan Yang, Sergey Tulyakov

ICCV 2023

[abs]

[paper]

[project page]

Toward infinite-scale 3D city synthesis, we propose a novel framework, InfiniCity, which constructs and renders an unconstrainedly large and 3D-grounded environment from random noise. InfiniCity decomposes the seemingly impractical task into three feasible modules, taking advantage of both 2D and 3D data. First, an infinite-pixel image synthesis module generates arbitrary-scale 2D maps from the bird's-eye view. Next, an octree-based voxel completion module lifts the generated 2D map to 3D octrees. Finally, a voxel-based neural rendering module texturizes the voxels and renders 2D images. InfiniCity can thus synthesize arbitrary-scale and traversable 3D city environments, and it allows flexible and interactive editing by users. We quantitatively and qualitatively demonstrate the efficacy of the proposed framework.


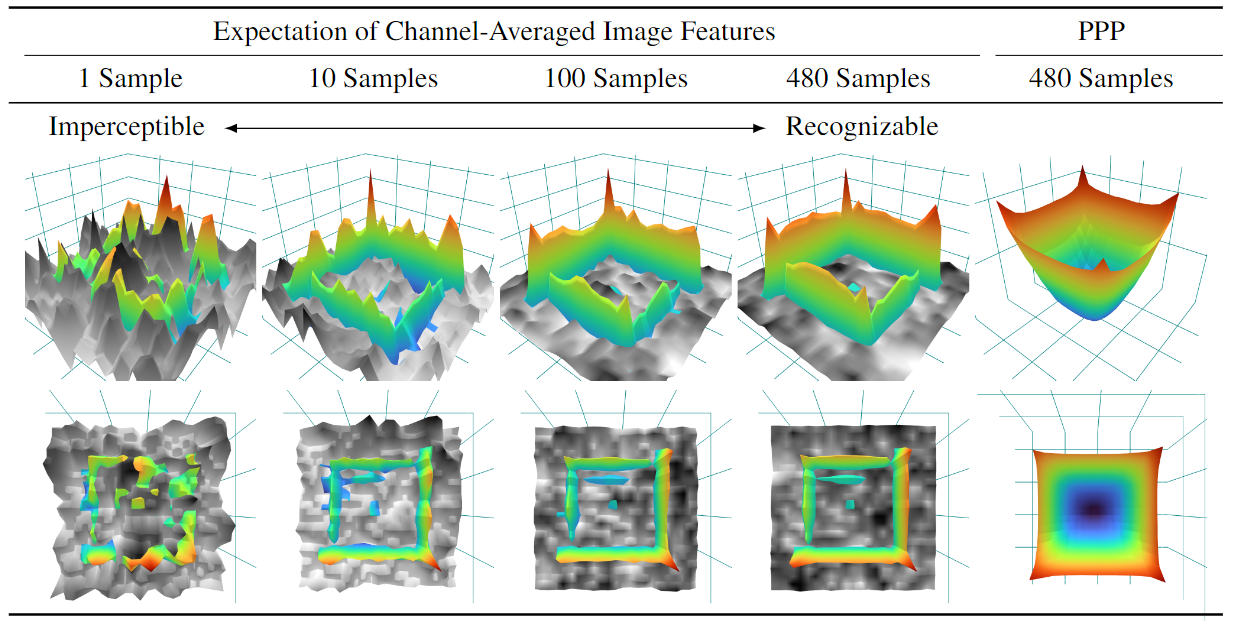

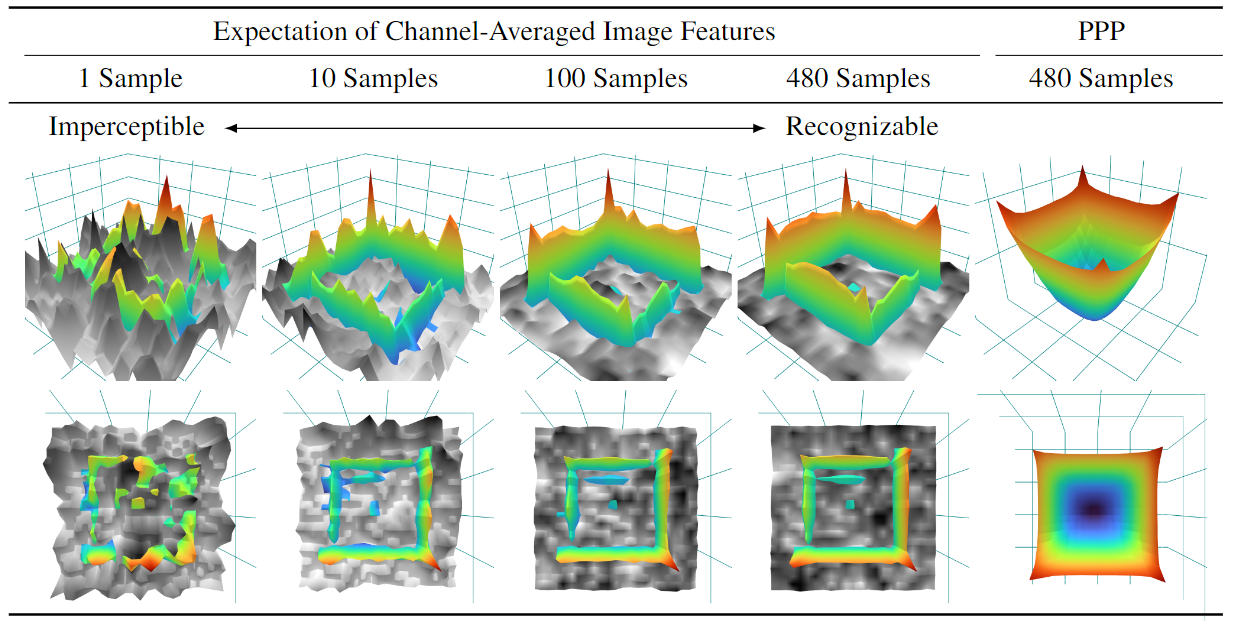

Unveiling The Mask of Position-Information Pattern Through the Mist of Image Features

Chieh Hubert Lin, Hsin-Ying Lee, Hung-Yu Tseng, Maneesh Singh, Ming-Hsuan Yang

ICML 2023

[abs]

[paper]

Recent studies have shown that paddings in convolutional neural networks encode absolute position information, which can negatively affect model performance for certain tasks. However, existing metrics for quantifying the strength of positional information remain unreliable and frequently lead to erroneous results. To address this issue, we propose novel metrics for measuring and visualizing the encoded positional information. We formally define the encoded information as Position-information Pattern from Padding (PPP) and conduct a series of experiments to study its properties as well as its formation. The proposed metrics measure the presence of positional information more reliably than the existing metrics based on PosENet and tests in F-Conv. We also demonstrate that, for any extant (and proposed) padding schemes, PPP is primarily a learning artifact and is less dependent on the characteristics of the underlying padding schemes.
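How padding can leak absolute position is easy to see on a toy example. In the NumPy sketch below (an illustration only, not the paper's PPP metric), a single fixed box filter is applied to a constant image: with zero padding the response drops near the borders, so the output alone reveals where a pixel sits, while reflection padding keeps it uniform. The helper `conv2d_same` is a hypothetical name.

```python
import numpy as np

def conv2d_same(image, kernel, mode="constant"):
    """'Same'-size 2D cross-correlation; `mode` is forwarded to np.pad
    ("constant" pads with zeros by default)."""
    kh, kw = kernel.shape
    padded = np.pad(image, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode=mode)
    out = np.zeros_like(image, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            out[i, j] = np.sum(padded[i:i + kh, j:j + kw] * kernel)
    return out

image = np.ones((5, 5))         # constant input: carries no positional signal
kernel = np.ones((3, 3)) / 9.0  # simple box filter

zero_padded = conv2d_same(image, kernel)                # corners 4/9, edges 6/9, interior 1
reflect_padded = conv2d_same(image, kernel, "reflect")  # uniform 1 everywhere
```

This only shows the direct border effect of one hand-picked filter. The paper's finding is that in trained networks PPP is primarily a learning artifact and depends less on the particular padding scheme.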


InfinityGAN: Towards Infinite-Pixel Image Synthesis

Chieh Hubert Lin, Hsin-Ying Lee, Yen-Chi Cheng, Sergey Tulyakov, Ming-Hsuan Yang

ICLR 2022

[abs]

[paper]

[project page]

[codes (PyTorch)]

We present InfinityGAN, a method to generate arbitrary-sized images. The problem is associated with several key challenges. First, scaling existing models to an arbitrarily large image size is resource-constrained, both in terms of computation and availability of large-field-of-view training data. InfinityGAN trains and infers patch-by-patch seamlessly with low computational resources. Second, large images should be locally and globally consistent, avoid repetitive patterns, and look realistic. To address these, InfinityGAN takes global appearance, local structure and texture into account. With this formulation, we can generate images with spatial size and level of detail not attainable before. Experimental evaluation supports that InfinityGAN generates images with superior global structure compared to baselines and features parallelizable inference. Finally, we show several applications unlocked by our approach, such as fusing styles spatially, multi-modal outpainting and image inbetweening at arbitrary input and output sizes.
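One property that makes patch-by-patch inference seamless can be shown with a toy generator: if every output pixel is a function only of its global coordinate and a shared latent code, generating tiles independently and stitching them reproduces the full canvas exactly. The `synthesize` function below is a hypothetical stand-in, not InfinityGAN's generator; real convolutional generators also have receptive fields that cross tile borders, which a full method must account for.

```python
import numpy as np

def synthesize(xs, ys, z):
    """Hypothetical stand-in generator: each pixel depends only on its global
    (x, y) coordinate and the shared latent z, never on tile boundaries."""
    return np.sin(z[0] * xs + z[1] * ys) + np.cos(z[2] * xs * 0.1)

def render_region(x0, y0, h, w, z):
    """Render an h x w window whose top-left corner sits at global (x0, y0)."""
    ys, xs = np.mgrid[y0:y0 + h, x0:x0 + w].astype(float)
    return synthesize(xs, ys, z)

def render_by_patches(height, width, patch, z):
    """Fill a canvas tile by tile; each tile only needs its own coordinates."""
    canvas = np.empty((height, width))
    for y0 in range(0, height, patch):
        for x0 in range(0, width, patch):
            ph, pw = min(patch, height - y0), min(patch, width - x0)
            canvas[y0:y0 + ph, x0:x0 + pw] = render_region(x0, y0, ph, pw, z)
    return canvas
```

Because each tile can be computed independently, memory stays bounded by the tile size and tiles can be generated in parallel or at any location on demand.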


In&Out: Diverse Image Outpainting via GAN Inversion

Yen-Chi Cheng, Chieh Hubert Lin, Hsin-Ying Lee, Jian Ren, Sergey Tulyakov, Ming-Hsuan Yang

CVPR 2022

[abs]

[paper]

[project page]

[codes (TBA)]

Image outpainting seeks a semantically consistent extension of the input image beyond its available content. Compared to inpainting (filling in missing pixels in a way coherent with the neighboring pixels), outpainting can be achieved in more diverse ways since the problem is less constrained by the surrounding pixels. Existing image outpainting methods pose the problem as a conditional image-to-image translation task, often generating repetitive structures and textures by replicating the content available in the input image. In this work, we formulate the problem from the perspective of inverting generative adversarial networks. Our generator renders micro-patches conditioned on their joint latent code as well as their individual positions in the image. To outpaint an image, we seek multiple latent codes that not only recover the available patches but also synthesize diverse outpaintings through patch-based generation. This leads to richer structure and content in the outpainted regions. Furthermore, our formulation allows for outpainting conditioned on categorical input, thereby enabling flexible user controls. Extensive experimental results demonstrate that the proposed method performs favorably against existing in- and outpainting methods, featuring higher visual quality and diversity.


InstaNAS: Instance-aware Neural Architecture Search

An-Chieh Cheng*, Chieh Hubert Lin*, Da-Cheng Juan, Wei Wei, Min Sun

AAAI 2020

[abs]

[paper]

[project page (w/ demo)]

[codes]

Conventional Neural Architecture Search (NAS) aims at finding a single architecture that achieves the best performance, usually by optimizing task-related learning objectives such as accuracy. However, a single architecture may not be representative enough for a whole dataset with high diversity and variety. Intuitively, selecting domain-expert architectures that are proficient in domain-specific features can further benefit architecture-related objectives such as latency. In this paper, we propose InstaNAS, an instance-aware NAS framework that employs a controller trained to search for a "distribution of architectures" instead of a single architecture. This allows the model to use sophisticated architectures for difficult samples, which usually come with a large architecture-related cost, and shallow architectures for easy samples. During the inference phase, the controller assigns each unseen input sample a domain-expert architecture that can achieve high accuracy with a customized inference cost. Experiments within a search space inspired by MobileNetV2 show that InstaNAS can achieve up to 48.8% latency reduction compared with MobileNetV2 without compromising accuracy on a series of datasets.


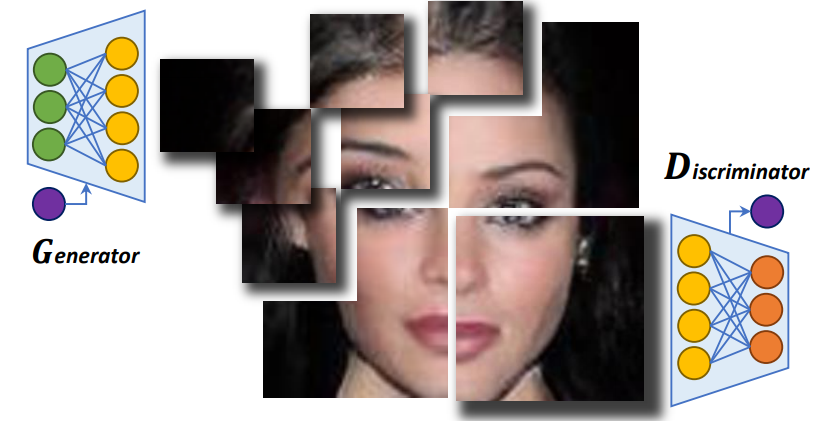

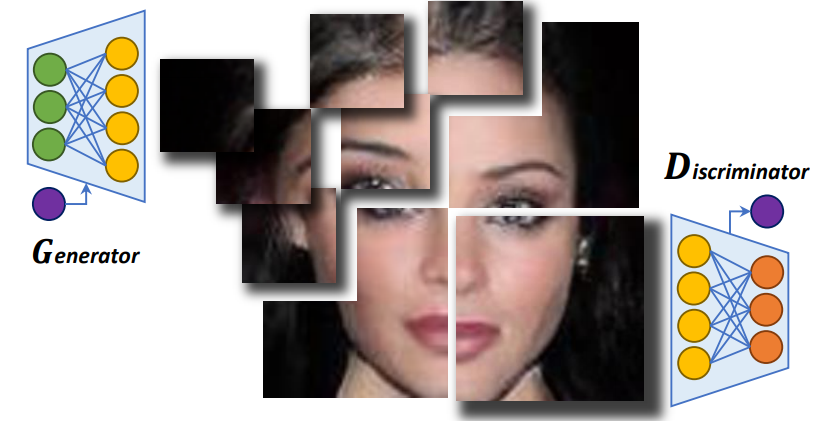

COCO-GAN: Generation by Parts via Conditional Coordinating

Chieh Hubert Lin, Chia-Che Chang, Yu-Sheng Chen, Da-Cheng Juan, Wei Wei, Hwann-Tzong Chen

ICCV 2019 (oral)

[abs]

[paper (low resolution)]

[paper (high resolution)]

[project page]

[codes]

Humans can only interact with part of the surrounding environment due to biological restrictions. Therefore, we learn to reason about the spatial relationships across a series of observations to piece together the surrounding environment. Inspired by such behavior and the fact that machines also have computational constraints, we propose COnditional COordinate GAN (COCO-GAN), whose generator generates images by parts, using their spatial coordinates as the condition. The discriminator, in turn, learns to judge the realism of multiple assembled patches by global coherence, local appearance, and edge-crossing continuity. Although full images are never generated during training, we show that COCO-GAN can produce **state-of-the-art-quality** full images during inference. We further demonstrate a variety of novel applications enabled by teaching the network to be aware of coordinates. First, we perform extrapolation to the learned coordinate manifold and generate off-the-boundary patches. Combined with the originally generated full image, COCO-GAN can produce images that are larger than the training samples, which we call "beyond-boundary generation". We then showcase panorama generation within a cylindrical coordinate system that inherently preserves horizontally cyclic topology. On the computation side, COCO-GAN has a built-in divide-and-conquer paradigm that reduces memory requirements during training and inference, provides high parallelism, and can generate parts of images on demand.
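The cylindrical coordinate system mentioned above can be illustrated with a small encoding sketch. Assuming, hypothetically (the paper's exact parameterization may differ), that the horizontal patch index is mapped to an angle and given to the generator as (cos θ, sin θ), the last column becomes a neighbor of the first, so the coordinate conditions themselves carry the horizontally cyclic topology. The function name `cylindrical_condition` is illustrative.

```python
import math

def cylindrical_condition(col, row, num_cols, num_rows):
    """Map a patch index to a hypothetical coordinate condition on a cylinder.

    The horizontal position becomes an angle encoded as (cos, sin), so column
    num_cols wraps back onto column 0; the vertical position stays linear in [-1, 1].
    """
    theta = 2.0 * math.pi * col / num_cols
    y = -1.0 + 2.0 * row / (num_rows - 1) if num_rows > 1 else 0.0
    return (math.cos(theta), math.sin(theta), y)
```

With this encoding the step from the last column back to the first is the same size as any other horizontal step, so a generator conditioned on it has no privileged left or right edge, which is what a seamless 360° panorama needs.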


Point-to-Point Video Generation

Tsun-Hsuan Wang*, Yen-Chi Cheng*, Chieh Hubert Lin, Hwann-Tzong Chen, Min Sun

ICCV 2019

[abs]

[paper]

[project page]

[codes]

While image manipulation has achieved tremendous breakthroughs (e.g., generating realistic faces) in recent years, video generation is much less explored and harder to control, which limits its applications in the real world. For instance, video editing requires temporal coherence across multiple clips and thus poses both start and end constraints within a video sequence. We introduce point-to-point video generation, which controls the generation process with two control points: the targeted start- and end-frames. The task is challenging since the model must not only generate a smooth transition of frames but also plan ahead to ensure that the generated end-frame conforms to the targeted end-frame for videos of various lengths. We propose to maximize a modified variational lower bound of the conditional data likelihood under a skip-frame training strategy. Our model can generate sequences whose end-frame is consistent with the targeted end-frame without loss of quality or diversity. Extensive experiments are conducted on Stochastic Moving MNIST, Weizmann Human Action, and Human3.6M to evaluate the effectiveness of the proposed method. We demonstrate our method under a series of scenarios (e.g., dynamic length generation), and the qualitative results showcase the potential and merits of point-to-point generation.


3D LiDAR and Stereo Fusion using Stereo Matching Network with Conditional Cost Volume Normalization

Tsun-Hsuan Wang, Hou-Ning Hu, Chieh Hubert Lin, Yi-Hsuan Tsai, Wei-Chen Chiu, Min Sun

IROS 2019

[abs]

[paper]

[project page]

[codes]

The complementary characteristics of active and passive depth sensing techniques motivate the fusion of the LiDAR sensor and stereo camera for improved depth perception. Instead of directly fusing estimated depths across LiDAR and stereo modalities, we take advantage of the stereo matching network with two enhanced techniques that use the LiDAR information: Input Fusion and Conditional Cost Volume Normalization (CCVNorm). The proposed framework is generic and closely integrated with the cost volume component that is commonly utilized in stereo matching neural networks. We experimentally verify the efficacy and robustness of our method on the KITTI Stereo and Depth Completion datasets, obtaining favorable performance against various fusion strategies. Moreover, we demonstrate that, with a hierarchical extension of CCVNorm, the proposed method adds only slight overhead to the stereo matching network in terms of computation time and model size.
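The idea of normalizing a cost volume conditioned on LiDAR can be sketched as below. This is a simplified, hypothetical variant for illustration: the affine parameters are looked up per voxel from three cases (the disparity hypothesis matches the LiDAR disparity, disagrees with it, or there is no LiDAR return), whereas in the paper the parameters are learned and the exact conditioning, including the hierarchical extension, may differ. The function name and the `gamma`/`beta` dictionaries are assumptions.

```python
import numpy as np

def cost_volume_norm(cost, lidar_disp, gamma, beta, eps=1e-5):
    """Simplified, hypothetical conditional normalisation of a (D, H, W) cost volume.

    cost:       (D, H, W) matching costs, one slice per disparity hypothesis d
    lidar_disp: (H, W) sparse LiDAR disparity, NaN where there is no return
    gamma/beta: dicts of scalar affine parameters for three cases:
                'match'   - hypothesis d equals the rounded LiDAR disparity
                'other'   - LiDAR present but d disagrees
                'invalid' - no LiDAR measurement at this pixel
    """
    normed = (cost - cost.mean()) / np.sqrt(cost.var() + eps)     # plain normalisation
    d = np.arange(cost.shape[0])[:, None, None]                   # (D, 1, 1) hypotheses
    valid = ~np.isnan(lidar_disp)                                 # (H, W)
    match = valid & (d == np.round(np.nan_to_num(lidar_disp)))    # (D, H, W)
    g = np.where(match, gamma["match"], np.where(valid, gamma["other"], gamma["invalid"]))
    b = np.where(match, beta["match"], np.where(valid, beta["other"], beta["invalid"]))
    return g * normed + b                                         # case-dependent affine
```

The point of the design is that sparse LiDAR never has to be densified first: pixels without a return simply fall back to unconditional parameters, while pixels with one can bias the matching costs toward the measured disparity.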


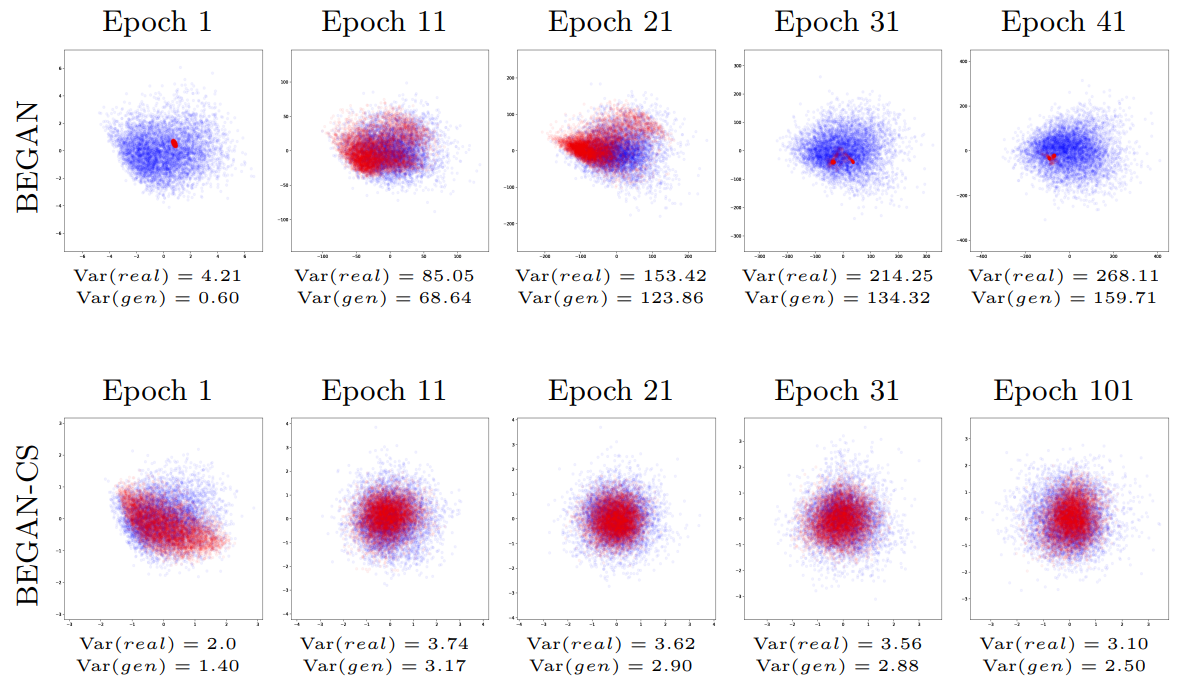

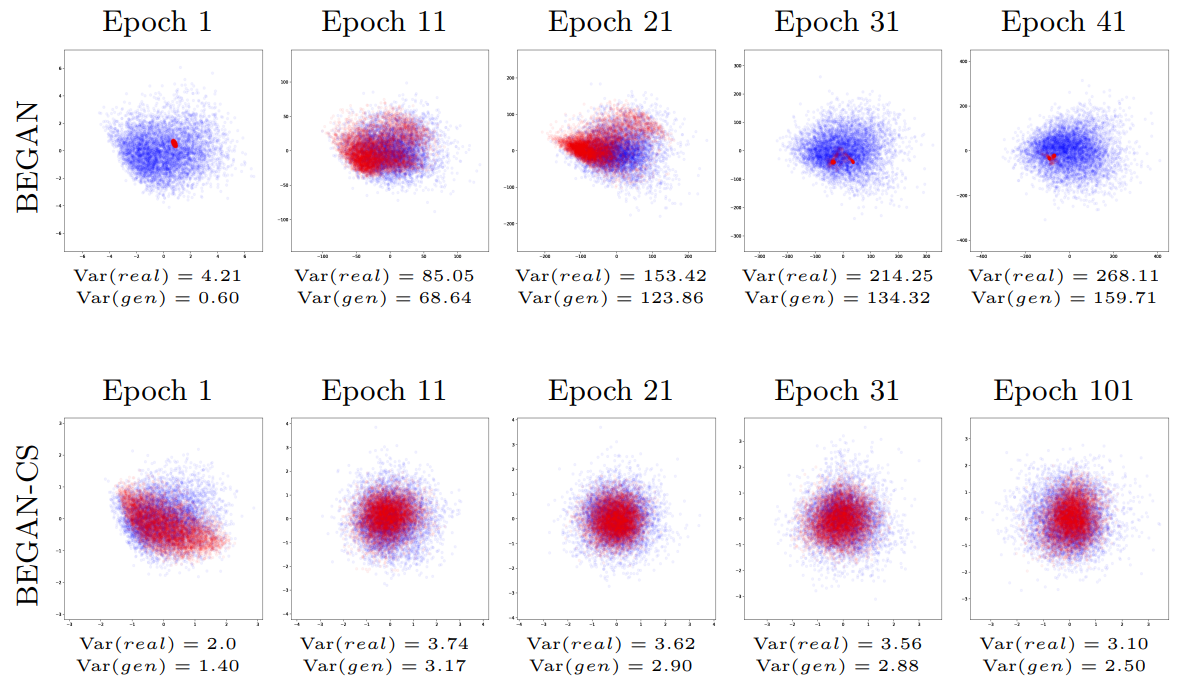

Escaping from Collapsing Modes in a Constrained Space

Chia-Che Chang*, Chieh Hubert Lin*, Che-Rung Lee, Da-Cheng Juan, Wei Wei, Hwann-Tzong Chen

ECCV 2018

[abs]

[paper]

[codes]

Generative adversarial networks (GANs) often suffer from unpredictable mode collapse during training. We study the issue of mode collapse in the Boundary Equilibrium Generative Adversarial Network (BEGAN), which is one of the state-of-the-art generative models. Despite its ability to generate high-quality images, we find that BEGAN tends to collapse at some modes after a period of training. We propose a new model, called BEGAN with a Constrained Space (BEGAN-CS), which includes a latent-space constraint in the loss function. We show that BEGAN-CS can significantly improve training stability and suppress mode collapse without either increasing the model complexity or degrading the image quality. Further, we visualize the distribution of latent vectors to elucidate the effect of the latent-space constraint. The experimental results show that our method has the additional advantages of being able to train on small datasets and to generate images similar to a given real image yet with variations of designated attributes on the fly.
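For reference, the standard BEGAN objective, whose discriminator is an auto-encoder $D = \mathrm{Dec}\circ\mathrm{Enc}$ with reconstruction loss $\mathcal{L}(v) = \lVert v - D(v)\rVert^{\eta}$, is sketched below together with one way a latent-space constraint could enter it. The form of the constraint term $\mathcal{L}_c$ (a distance between the sampled latent $z_D$ and the encoder's embedding of $G(z_D)$, assuming both have the same dimension) and its weight $\alpha$ are assumptions made for illustration; the paper gives the exact formulation.

$$
\begin{aligned}
\mathcal{L}_D &= \mathcal{L}(x) - k_t\,\mathcal{L}\bigl(G(z_D)\bigr) + \alpha\,\mathcal{L}_c, \\
\mathcal{L}_G &= \mathcal{L}\bigl(G(z_G)\bigr), \\
k_{t+1} &= k_t + \lambda_k\bigl(\gamma\,\mathcal{L}(x) - \mathcal{L}\bigl(G(z_G)\bigr)\bigr), \\
\mathcal{L}_c &= \bigl\lVert z_D - \mathrm{Enc}\bigl(G(z_D)\bigr) \bigr\rVert_2 .
\end{aligned}
$$

Intuitively, a term like $\mathcal{L}_c$ penalizes the case where many different latents $z$ map to the same generated image, since such a collapse would make $z$ unrecoverable from $G(z)$.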
